Automated facial recognition technology comes of age

Arthur Piper, IBA Technology Correspondent

Automated facial recognition technology is facing a legal challenge in the UK, while some law enforcement authorities in the US are banned from using it. Global Insight assesses the technology and the risks it poses.

In a well-publicised court case in May 2019, Ed Bridges, an office worker from Cardiff, challenged the right of the South Wales Police to use automated facial recognition (AFR) technology in public places. His crowdfunded legal action was supported by the human rights watchdog Liberty. Bridges said he was distressed that the police had used the technology to capture his image on two separate occasions.

Dan Squires QC, representing Bridges, argued that the technology breached data protection and equality laws, and that Bridges had a reasonable expectation that his face would not be scanned in a public place without his consent if he was not suspected of wrongdoing. In response, Jeremy Johnson QC, for the police, argued that they have a common law power to use visual imagery for the ‘prevention and detection of crime’. He also denied that the use of AFR breached privacy law or Article 8 of the European Convention on Human Rights (the right to respect for private life), which is given effect in UK law by the Human Rights Act 1998. ‘So far as the individual is concerned, we submit there is no difference in principle to knowing you are on CCTV and somebody looking at it,’ Johnson told the court.

The ruling, expected at the beginning of September, could have wide-ranging implications for the policing of public places in the UK, and potentially for the use of AFR devices in airports, shopping centres and corporate buildings, and on mobile phones.

While only two other UK police forces, the Metropolitan Police and Leicestershire Police, have trialled AFR, the technology is spreading rapidly. Market research firm Mordor Intelligence estimates that the global facial recognition market was worth about $4.5bn in 2018 and will double to about $9bn by 2024. Fast-growing areas include Chinese and US government security programmes, surveillance systems and multi-factor identification processes in both devices and buildings.

From a legal perspective, AFR can be seen as an extension of closed-circuit television (CCTV). CCTV has been in widespread use since the 1970s, when systems began video-recording live images of streets, shops and private premises. That technology was, and still is, covered by data privacy laws, and depending on the jurisdiction, additional national regulations govern how it may be used.

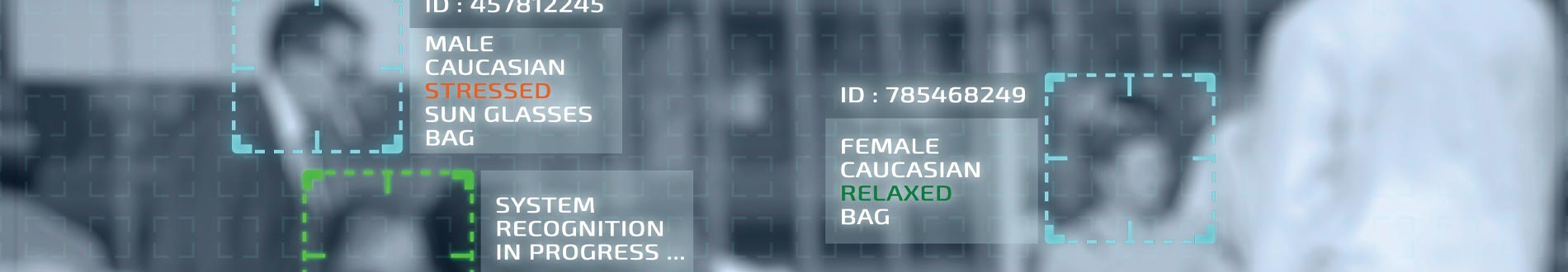

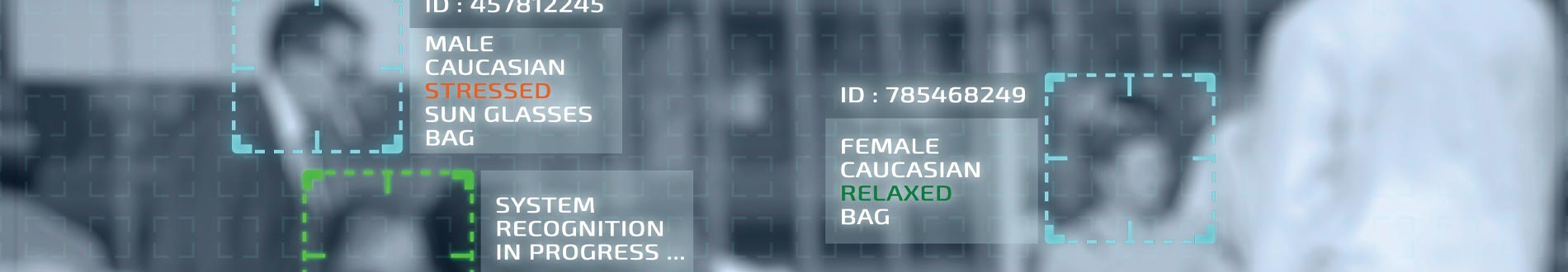

AFR adds sophisticated data processing into the mix, however. An algorithm maps the salient features of faces entering a camera’s field of view and creates a ‘facial fingerprint’. These fingerprints can be automatically compared with those in a stored database of faces. The results are typically ranked by a similarity score, which indicates how closely the algorithm judges each stored image to match the captured face.
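To make the matching step more concrete, here is a minimal Python sketch. It assumes each ‘facial fingerprint’ has already been reduced to a list of numbers (many modern systems derive these with trained neural networks, which are not shown here) and uses cosine similarity as the comparison measure. The function names `cosine_similarity` and `rank_matches`, the threshold and the toy watchlist are hypothetical illustrations, not the workings of any police force’s or vendor’s actual system.

```python
import math
from typing import Dict, List, Tuple

# Assumption: a "facial fingerprint" is a fixed-length vector of floats.
Fingerprint = List[float]


def cosine_similarity(a: Fingerprint, b: Fingerprint) -> float:
    """Return the cosine of the angle between two fingerprints (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector carries no facial information, so it matches nothing
    return dot / (norm_a * norm_b)


def rank_matches(probe: Fingerprint,
                 gallery: Dict[str, Fingerprint],
                 threshold: float = 0.0) -> List[Tuple[str, float]]:
    """Compare a captured face against every stored record and list the candidates,
    most similar first, dropping any whose score falls below the threshold."""
    scored = [(record_id, cosine_similarity(probe, stored))
              for record_id, stored in gallery.items()]
    candidates = [pair for pair in scored if pair[1] >= threshold]
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical watchlist with made-up three-number fingerprints (real ones are far longer).
    watchlist = {
        "record_A": [0.9, 0.1, 0.0],
        "record_B": [0.0, 1.0, 0.0],
        "record_C": [0.6, 0.8, 0.0],
    }
    captured = [1.0, 0.0, 0.0]
    for record_id, score in rank_matches(captured, watchlist, threshold=0.5):
        print(f"{record_id}: {score:.2f}")
```

With these toy numbers, record_A (about 0.99) and record_C (0.60) clear the 0.5 threshold and record_B is dropped. The threshold is the key design choice in a sketch like this: lowering it surfaces more candidates for a human operator to review, but also more false positives.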

Problems with bias

AFR is not without technical and social problems. First, cameras struggle in low light. Poor angles of vision, or people wearing sunglasses, hats and other accessories, can make individuals hard to identify. Police custody databases can be a mixed bag, ranging from clear full-frontal portrait shots to drawings. Algorithms vary widely in quality, so true positive identification rates depend both on the software used and on how well staff have been trained to use it.

More worryingly, AFR systems can show gender and racial biases. Algorithms learn what a face is through repeated exposure to huge facial databases. Many of those image sets consist mostly of white men, and in those circumstances AFR systems may struggle to recognise women and people of colour. Joy Buolamwini, for instance, an African-American former researcher at the Massachusetts Institute of Technology’s Media Lab and founder of the Algorithmic Justice League, discovered that AFR systems ‘recognised’ her better when she wore a white mask.

In addition, some people seem to have what may jokingly be described as a ‘guilty face’.

The South Wales Police system repeatedly produced ‘false positives’ for one or two individuals, known in the jargon as ‘lambs’, meaning that they could have been stopped on multiple occasions for no other reason than that the AFR had flagged them.

Law enforcement authorities have been banned from using the technology in both San Francisco and Somerville, Massachusetts. The bans come amid growing public distrust of the surveillance capitalism of big tech firms, as well as fears about the threat to civil liberties from a ‘Big Brother’ state, named after the all-seeing figurehead of the surveillance regime in George Orwell’s novel 1984.

Privacy laws versus facial recognition

In many parts of the world, the technology is coming of age precisely at a time when increased data privacy regulations are springing up. Europe’s General Data Protection Regulation (GDPR), which sets strict conditions on processing personal data and treats biometric data used to identify individuals as a special category that generally requires explicit consent unless a narrow exemption applies, could stem the worst excesses of AFR. While shops and leisure facilities can all upgrade their security surveillance systems to include AFR, the issue of consent could prove problematic. Since withdrawing consent must be as easy as giving it, AFR in such places could prove too difficult to manage. Legislation similar to GDPR, such as the California Consumer Privacy Act of 2018, is likely to set the legal hurdle high for businesses deploying the technology in regions with strict privacy laws.

There is currently no legislation specifically designed to regulate the use of AFR. In Europe, in addition to the GDPR, citizens are protected under the European Convention on Human Rights, as well as the Convention for the Protection of Individuals with regard to Automatic Processing of Personal Data.

Søren Skibsted, Co-Chair of the IBA Technology Law Committee and a partner at Kromann Reumert, is sceptical that there will be any short-term move to create technology-specific legislation. ‘In the longer term, as the technology develops and its use becomes more sophisticated, you would expect to see GDPR-like regulations designed specifically for AFR,’ he says.

Skibsted believes more should be done. He would like to see, for instance, public awareness campaigns explaining how and when law enforcement agencies and private companies can legitimately deploy AFR. In addition, he says that members of the public should have more effective ways of complaining if they think organisations are not compliant.

‘There should be governmental organisations with specific oversight of AFR, so you can complain as a private citizen rather than having to go to the courts,’ he says. This would need to be backed up with substantial resources because ensuring compliance, he believes, ‘could be a massive job’. Currently, in Europe, people may complain to their national data protection regulator or go to the courts, but because the area is relatively untested, many may not appreciate the choices available and their implications.

Public opinion is likely to play a big role in how far organisations are able to adopt AFR technologies. While there is an obvious public interest element to law enforcement agencies using AFR in specific circumstances (although, as we have seen, this assumption is now being tested in both the UK and US), selling the idea to retail customers is likely to be a tougher job. As AFR becomes more widespread, treating people as a means to an organisation’s own ends is apt to become less acceptable.

Arthur Piper is a freelance journalist. He can be contacted at arthurpiper@mac.com