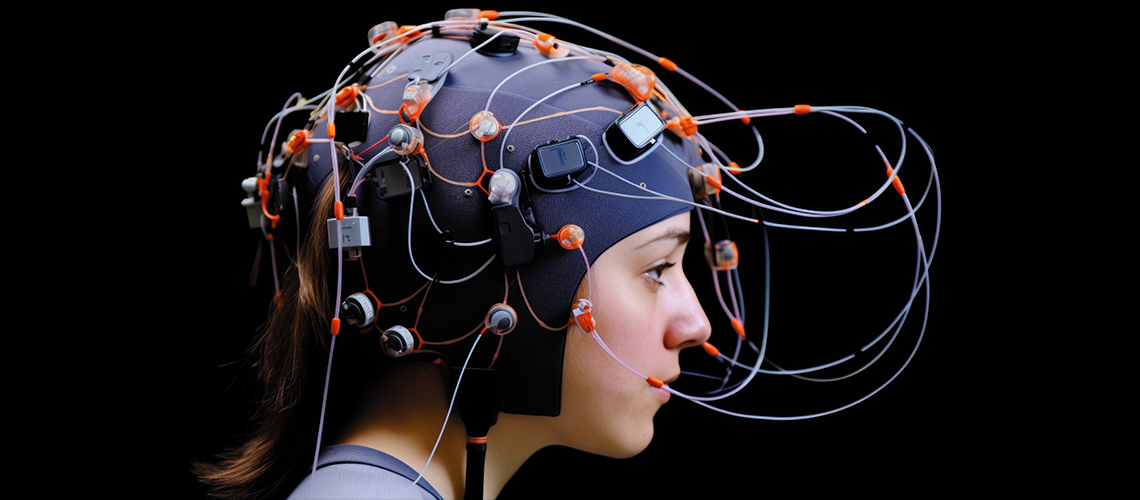

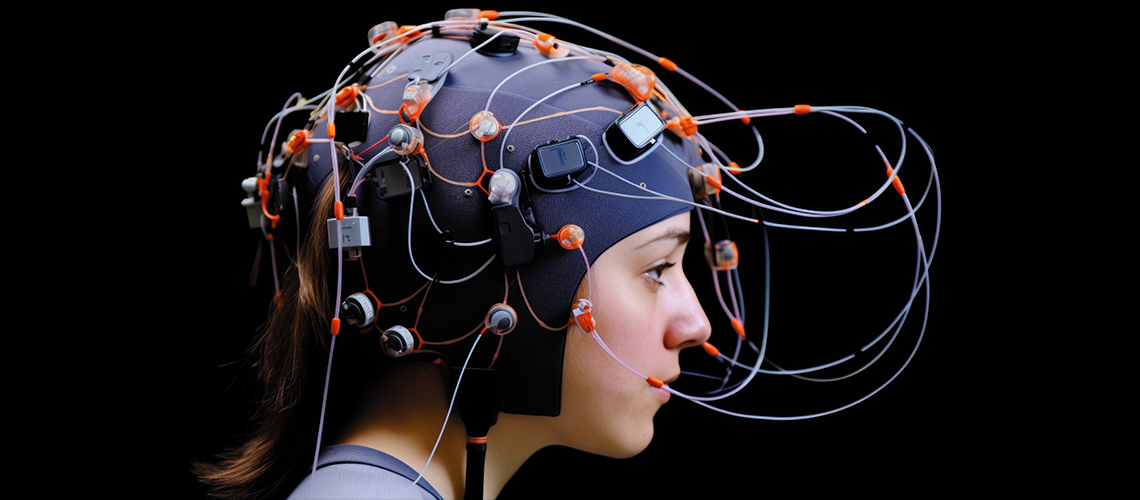

Human rights: advances in neurotechnology lead to calls for protection against abuse of ‘brain data’

Sara Chessa

Thursday 26 October 2023

Neurotechnologies are becoming increasingly able to decode our innermost self, threatening the ability of existing human rights frameworks to protect individual liberty and privacy. The term ‘neurorights’ indicates new rights to be integrated within national and international law to protect the mental neurocognitive sphere from the deep interference made possible by brain–computer interfaces and other technologies interacting with the neural system.

Such devices are used predominantly in the medical field or in the military. However, in the future, technological cognitive enhancement may be available to individuals. Given the potential for unwanted intrusions into the mental sphere that neurotechnologies make possible, there’s a growing awareness of the need for a governance framework.

Faced with the ethical challenge of determining the conditions under which it’s legitimate to interfere with another person’s neural activity, Marcello Ienca, Assistant Professor of Ethics of AI and Neuroscience at the TUM School of Medicine and Health in Munich, and Roberto Andorno, Associate Professor of Biomedical Law and Bioethics at the Law Faculty of the University of Zürich, have identified four possible neurorights. The first is the right to mental privacy, allowing individuals to secure neural information from unwanted access. The Italian privacy regulator, for example, is among those concerned about mental privacy violations and is organising a conference on the dangers inherent in ‘neuroimaging’, which is becoming so advanced that it might soon be recognised as ‘mind reading’.

The second right relates to psychological continuity. Neural devices can be used for stimulating brain function or modulating it – an example being transcranial direct current stimulation (tDCS) devices, which generate a constant, low current delivered to a specific brain area via electrodes on the scalp. The alterations such devices produce in brain function can positively affect a patient’s condition. Given the therapeutic effectiveness of similar technologies, the use of brain stimulation devices will likely expand beyond the psychiatric field. However, tDCS devices may cause unintended alterations and affect an individual’s self-perception.

In a study of patients treated with deep brain stimulation (DBS), more than half of participants expressed a feeling of unfamiliarity with themselves after surgery, saying for instance: ‘I have not found myself again after the surgery’. Additionally, memory engineering technologies may have an impact on an individual’s identity by selectively removing, altering, adding or replacing memories relevant to self-recognition. The right to psychological continuity therefore seeks to preserve the individual’s identity.

The third proposed neuroright concerns mental integrity. Article 3 of the EU’s Charter of Fundamental Rights states that ‘everyone has the right to respect for their physical and mental integrity’. It requires, for example, the free and informed consent of the patient. Andorno and Ienca suggest reconceptualising this right in such a way as to protect individuals from non-authorised intrusions that have a direct impact on their neural computation and thus cause harm.

Finally, Andorno and Ienca outline the right to cognitive freedom, to safeguard the ability to make free and competent decisions on the use of neurotechnologies. Adults should be free to use brain–computer interfaces and similar devices for both medical reasons and cognitive enhancement purposes so long as they don’t cause damage to the freedoms of others.

Mental privacy issues in the neurotechnology era were discussed during the Royal Society Summit on Neural Interfaces, held in mid-September in London. Ienca, among the speakers, tells Global Insight that ‘as the ecosystem of consumer neurotechnologies is expanding beyond biomedical research and clinical intervention, very extensive banks of neural data are being created and cross-referenced with other data related to online behaviour’. In some cases, this is happening because commercial companies have policies allowing data transfer to third parties. In other cases, large technology conglomerates have acquired neurotechnology companies, meaning that neural data banks are directly available. ‘What we learnt in the last 20 years about Big Data is that large datasets are retrospectively analysed in such a way that inferences can be made even when the data has been sufficiently de-identified’, says Ienca.

He describes a second problem – the decoding of neural data without understanding the subject. ‘Artificial intelligence in recent years has made great strides, and we now have neural network models that can decode the content of mental states’, he explains. ‘We are talking about visual, auditory and even semantic content, in other words, reconstructing a person’s thoughts from neural data.’

‘Before we think about creating new laws, we should look at the existing ones and consider whether, and to which extent, we really need new laws’, says Monika Gattiker, Vice Chair of the IBA Healthcare and Life Sciences Law Committee and a partner at Lanter in Zürich. Gattiker highlights, for instance, that Articles 10 and 13 of the Swiss Constitution provide protection against ‘mind reading’. She explains that ‘the privacy of thoughts goes beyond the privacy, for example, of a letter. If a person agrees to neuroimaging, the data protection laws set the limits with regard to collecting the information/results’.

Then there are relevant supranational regulations, specifically the Council of Europe’s Convention for the Protection of Human Rights and Dignity of the Human Being regarding the Application of Biology and Medicine. Gattiker believes there may be a need for laws to be amended, but doesn’t think ‘we need to extensively regulate the neurotechnologies at this point […] however, the existing laws should be applied and enforced, and developments closely monitored’.

Anurag Bana, Senior Legal Adviser in the IBA’s Legal Policy & Research Unit, says that whether we choose to amend existing laws or create a new set of rules, the crucial step is a discussion between all stakeholders. He highlights the impact assessments in respect of human rights due diligence included within the UN Guiding Principles on Business and Human Rights, which call for an assessment of which areas are directly affected. ‘We need to understand risks and opportunities and, therefore, the companies that are investing should take a pledge that they will be really following the basic standards of protecting rights and obligations: they should take it on [themselves] when they are developing this technology’, he says.

So far, the only country that has included neurorights in its constitution is Chile. Carlos Amunátegui Perelló, Professor of Legal Theory and Artificial Intelligence at the Pontifical Catholic University of Chile, was among the scholars advising the Chilean government. ‘It is crucial to regulate neurotechnologies now because it is always easier to prevent a problem than to fix it’, he says. ‘The possible pervasiveness of brain–computer interfaces will have such profound effects that they will be difficult to control.’ Amunátegui Perelló adds that access to people’s brain data on a massive scale means that large companies will have an incredibly detailed picture of how our brains work and, therefore, ‘the possibility to control our emotions, thoughts and decisions’.

Image credit: Joe P/AdobeStock.com